Welcome Back, Move Fast & Break Things

The AI talent war isn’t just about AGI - it’s about power, product, and the platforms being rebuilt beneath us.

A few months ago, Meta quietly started reaching out to a handful of AI researchers with an unusual offer: “Hey, how about $100 million to join our new superintelligence lab?”

That wasn’t a typo. That was Meta CEO Mark Zuckerberg[1] - personally DM-ing engineers about joining what insiders now call The List: a secret recruitment file of the most elite minds in AI. The ones who really understand how to push the limits of large models, reasoning, and memory. The ones who, apparently, are now worth more than top NBA players or Messi.

But if you’re a startup founder or a product lead trying to build anything serious with AI, you might be tempted to roll your eyes. “Cool story, Zuck. Can we get back to real-world budgets now?”

Don’t. Because what’s behind this hiring arms race holds real lessons for everyone trying to build with AI.

🧨 How the War for AI Talent Just Went Nuclear

Something big is happening, and it’s moving fast. Over the past few months, Meta has quietly launched one of the most aggressive talent raids in tech history. According to new reports from The Information[2] and The Wall Street Journal[3], Meta has hired at least 13 top AI researchers, including elite scientists from OpenAI, Google DeepMind, and Anthropic. It has also created a new internal division, Meta Superintelligence Labs[4], and named Alexandr Wang as Chief AI Officer, with Nat Friedman leading product (announced on Tuesday).

Among the recent hires: Shengjia Zhao, Jiahui Yu, Shuchao Bi, and Hongyu Ren, key architects of OpenAI’s fast reasoning models. Others include Lucas Beyer (confirmed on X[5]), Alexander Kolesnikov, and Xiaohua Zhai, the Zurich-based trio who built OpenAI’s multimodal pipeline after leaving DeepMind.[6]

They are the people shaping the frontier: model theorists, alignment hackers, memory engineers. And yes, we’re tracking who’s making the jump.

👉 Check the Appendix at the end of this blog for a full list of top AI researchers Meta has already poached.

Why the urgency? Meta’s last major model, Llama 4, underwhelmed. Zuckerberg’s solution isn’t just another training run; it’s a full reboot of his AI strategy. He’s chasing superintelligence. And to get there, he’s building the dream team. Sometimes personally. Sometimes from Lake Tahoe.

It’s about power. The researchers Meta is recruiting are the ones who quietly built the next frontier: memory systems, multimodal alignment, long-context reasoning. The kind of tribal knowledge you can’t copy-paste from GitHub. And now they’re jumping ship.

As Alexandr Wang said in a recent interview[7], “Even if you’re just 3–6 months ahead, once AGI is live, it develops itself at higher speed. It never stops. That’s when you rule the business world - like Google did with search.”

This is the moment where infrastructure stops being the moat. The moat becomes the people, and the exponential head start they give you. That’s why Meta is rewriting its org chart and throwing $100M offers at top minds. Because this is it.

The game has started. And nobody wants to be second.

The Strategic Game: Why Talent, Timing & Architecture Decide Everything

AI is a platform shift, and also a strategic frontier: in geopolitics, in software, and in global markets in general.

🔭 The Global AI Arms Race

In both tech and geopolitics, AI has become a full-blown arms race. Governments (especially the U.S. and China) and the top tech companies are investing billions to win the race to Artificial General Intelligence (AGI), the moment when machines can reason, learn, and improve themselves across domains, like a human.

On The Shawn Ryan Show, Alexandr Wang emphasized that even a 3–6 month lead in AGI could compound into an unassailable advantage once models begin improving themselves.

It’s strategy. And there’s historical precedent:

First-mover dominance: Google became a monopoly by catching the wave early. Now imagine that same dynamic playing out across every industry.

Exponential loops: Self-improving systems iterate faster than any human-driven R&D. Lagging even by months can mean permanent disadvantage.

Nation-state urgency: A 2025 paper by Dan Hendrycks[8] describes how the U.S. and China treat AGI as a “nuclear-like” capability, complete with secrecy, alignment issues, and geopolitical implications.

So yes, the war for talent is now a matter of statecraft, and every elite researcher is, essentially, a national security asset.

What Exactly Is AGI - And What Is Superintelligence?

Lately, Meta, OpenAI, and Anthropic have begun using “superintelligence” more often than “AGI”, signaling a shift from pursuing human-level AI to aiming for systems that surpass it.[9]

AGI (Artificial General Intelligence)[10] refers to an AI system capable of performing a broad range of cognitive tasks across multiple domains, at human-level proficiency, without retraining. It must be able to:

reason

learn

and handle unfamiliar situations autonomously.

According to Stanford HAI, AGI would need robustness, cross-domain adaptability, and independence.[11] While models like GPT-4 or Claude 3 excel at specific tasks, they still require human guidance, struggle with generalization, and lack true autonomy.

Superintelligence, a concept from Nick Bostrom’s Superintelligence: Paths, Dangers, Strategies,[12] refers to AI systems that far exceed human cognitive performance in all domains. Such systems would not only reason better than humans but also self-improve recursively, outpace human development, and potentially dominate strategic decision-making.[13]

This distinction becomes crucial as strategy shifts:

Meta’s research unit is named “Superintelligence Labs”;[14]

OpenAI discusses “AI systems that are smarter than humans”;[15]

Anthropic focuses on aligning advanced post-AGI systems.[16]

In essence, AGI represents the human-equivalent milestone; superintelligence represents going beyond and potentially leaping ahead. The former will reshape industries. The latter could reshape civilization.

🖥️ LLMs: The New Operating System

Back to the arms race and the mission to reach this next level of AI (AGI, superintelligence, … whatever[17]). As mentioned, this isn’t just a geopolitical game. The same dynamic is unfolding across the entire global economy. Every company - whether it builds AI or not - is now part of the race. Why? Because AI is quickly becoming the new operating system of business itself. Just as nations compete to control AGI, companies must now prove they won’t be outpaced or replaced. If AI rewrites the rules, then every industry built on software is up for disruption, and the new rule is simple: adapt or get overwritten.

In his Y Combinator talk[18], Andrej Karpathy reframes large language models (LLMs) as the new operating system of software. He calls this “Software 3.0”, where apps are programmed not through code, but through data, prompts, and feedback loops.

This shift is architectural. Most software today - from Jira and LinkedIn to Salesforce - was designed for structured input, menus, and logic trees. LLM-native tools, in contrast, can reason, personalize, and adapt.
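To make the contrast concrete, here is a toy sketch in Python that routes a support ticket two ways: with hand-written keyword rules (the classic approach), and with a natural-language instruction handed to a model (the Software 3.0 approach). The `llm` argument is a hypothetical stand-in for whatever model API you use; no specific provider or SDK is assumed, and the ticket labels are invented for illustration.

```python
from typing import Callable

# Classic software: behavior is spelled out as explicit rules in code.
def route_ticket_v1(text: str) -> str:
    lowered = text.lower()
    if "invoice" in lowered or "refund" in lowered:
        return "billing"
    if "password" in lowered or "login" in lowered:
        return "account"
    return "general"

# Software 3.0 framing: the "program" is largely a natural-language instruction.
# The model call is passed in as a plain function (hypothetical; no SDK assumed).
ROUTING_PROMPT = (
    "You are a support triage assistant. Classify the customer message into "
    "exactly one of: billing, account, general. Reply with the label only.\n\n"
    "Message: {message}"
)

LABELS = {"billing", "account", "general"}

def route_ticket_v3(text: str, llm: Callable[[str], str]) -> str:
    reply = llm(ROUTING_PROMPT.format(message=text)).strip().lower()
    # Keep the model's answer inside the known label set; fall back to a safe default.
    return reply if reply in LABELS else "general"
```

In the second version, most of the behavior lives in `ROUTING_PROMPT`, so changing what the app does means editing text rather than branches. The little code that remains exists to keep the model's answer inside a known label set, which is exactly where traditional code still earns its keep.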

This is why a growing wave of developers and investors believe the entire software stack will be rewritten from scratch, unlocking a market potential of $30 to $50 trillion.[19] That’s not just large; it’s historically unprecedented. No previous technology wave - not the internet, not smartphones, not even industrial automation - has approached this scale. In that context, UBS’s Chief Investment Officer calling AI “the most profound innovation and one of the largest investment opportunities in human history” no longer sounds bold.

Not everyone agrees on the OS approach. My partner Andrej Vckovski offers a grounded critique: LLMs alone won’t drive the full revolution. Without traditional ML - like classifiers, optimizers, and structured data systems - many “AI-first” apps risk becoming brittle GPT wrappers.

He thinks the future belongs to teams that can:

Blend LLMs for reasoning

Embed ML for structure, accuracy, and guardrails (think accounting or weather forecasting)

Wrap it all in systems that improve with every user interaction
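Here is a minimal sketch of that blend, assuming an accounting-style task. An LLM (again a hypothetical `llm` callable, not a real SDK) is asked to extract invoice amounts as JSON, and plain deterministic code checks the result before anything downstream trusts it. The JSON schema (`line_items`, `total`) and the retry policy are illustrative assumptions, not a prescribed design.

```python
import json
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Optional

@dataclass
class Check:
    ok: bool
    reason: str

def validate_invoice(raw: str) -> Check:
    """Deterministic guardrail: the LLM's JSON must parse and its numbers must add up."""
    try:
        # parse_float=Decimal avoids binary floating-point rounding (0.1 + 0.2 == 0.3).
        data = json.loads(raw, parse_float=Decimal)
        items = [Decimal(str(x)) for x in data["line_items"]]
        total = Decimal(str(data["total"]))
    except (ValueError, KeyError, TypeError, ArithmeticError) as exc:
        return Check(False, f"malformed output: {exc!r}")
    items_sum = sum(items, Decimal("0"))
    if items_sum != total:
        return Check(False, f"line items sum to {items_sum}, stated total is {total}")
    return Check(True, "ok")

def extract_invoice(
    document: str, llm: Callable[[str], str], max_attempts: int = 2
) -> tuple[Optional[str], list[str]]:
    """Ask the (hypothetical) model for JSON, retry on failure, and log every rejection."""
    rejections: list[str] = []
    prompt = (
        'Extract this invoice as JSON with keys "line_items" (list of amounts) '
        'and "total" (number):\n' + document
    )
    for _ in range(max_attempts):
        raw = llm(prompt)
        check = validate_invoice(raw)
        if check.ok:
            return raw, rejections
        rejections.append(check.reason)  # each rejection can feed an evaluation or feedback loop
    return None, rejections
```

The rejection log is the seed of the third point: every failed extraction is a labeled example you could use to improve prompts, evaluations, or a fine-tuned model over time.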

Bridging the Gap: Software 2.0 vs. Software 3.0

Some think the shift is architectural, but not total. Here’s how we see the debate inside StudioAlpha. In our internal discussions, we keep returning to a key question: What’s the difference between Software 2.0 and Software 3.0? The debate comes down to two perspectives: the technical foundation versus the paradigm shift in how we build and use software.

The Technical Reality (Vckovski’s View):

From a foundational standpoint, the traditional tech stack (OS, cloud, VM, AI models, and databases) remains firmly in place. These layers are essential for any application to function, providing the infrastructure and stability that businesses rely on. In other words, we’re not throwing away the old stack; we’re building on top of it.

The Paradigm Shift (Karpathy’s View):

Andrej Karpathy’s vision of Software 3.0 isn’t about replacing the technical layers. Instead, it’s about a shift in how we create software. In this new paradigm, AI models become the core of the application logic. Instead of coding every rule and function, developers train models on data, allowing the software to learn, adapt, and evolve. This is a fundamental change in the development process and how we think about software’s role.

Why Both Perspectives Matter

For Startups 🍼: Karpathy’s vision is especially relevant for startups. When you’re building something new with a small team, focusing on AI as the core logic can be a game changer. It allows for rapid iteration, data-driven improvements, and unique product experiences that set you apart.

For Corporates 🧟‍♂️: For larger enterprises, Vckovski’s perspective is reassuring. The foundational technology remains stable and reliable. Corporates can integrate AI into their existing systems, enhancing their capabilities without needing to overhaul their entire infrastructure.

The Bottom Line: Both perspectives are valuable and coexist in today’s tech landscape. The stack remains, but the way we leverage it is evolving. For startups, the focus is on innovation and agility, while corporates prioritize stability and integration. In the end, understanding both helps us navigate the exciting shift towards AI-driven software.

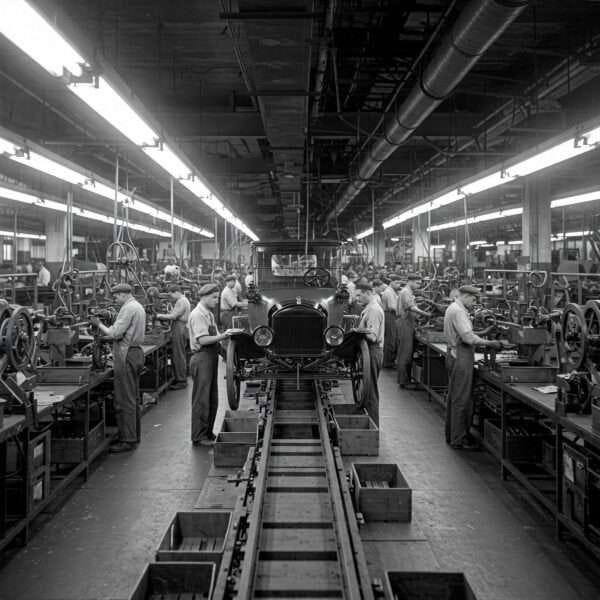

Still, we both agree on the outcome: within 5–10 years, today’s legacy apps - Jira, Salesforce, LinkedIn - will be replaced by systems designed from the ground up for this new logic layer. As history shows, the best carriage maker rarely becomes the best car company. When we look back, we will see the biggest business disruption in human history.

For founders 🍼: The stack is mostly rented.

your product

your UX

your data loop

and how you orchestrate the AI

… that’s where you win.

Forget AGI - Win Your Vertical

Yes, there’s an AI arms race … but unless you’re a hyperscaler, you’re not racing OpenAI or Anthropic. You’re racing for focus, execution speed, and user love.

Foundation model building is now a trillion-dollar game. But vertical AI - domain-specific products built with deep workflow insight - is wide open. That’s where early-stage teams win. Not with model innovation, but with product sharpness, data leverage, and customer obsession.

Let the hyperscalers fight over AGI. You’re building something more immediate: AI that actually works for real people doing real jobs.

What We See Working

More than half of new AI-native startups are doing exactly that:

VC conviction is high: Reports from Sequoia[20] and a16z[21] show that over half of the top new startups in generative AI are rebuilding categories from scratch, not layering LLMs on top of old systems.

Real-world products are scaling fast:

Replit is redefining developer environments with AI copilots

Perplexity is gaining ground on Google with a conversational, answer-first search model

Hebbia and Iqidis go deeper than first-wave tools like Harvey by using proprietary data and deeply integrated reasoning engines

Notion AI is pushing into intelligent workspace territory, blurring the line between docs, PM tools, and personal agents

What Founders Actually Need

As the Financial Times[22] put it, “data centers and chips are commodities; talent and data are the only defensible edges.” You don’t need a 50-person FAIR lab. But even with a 2–3 person team, you still need role clarity.

Here’s what we see in the best early-stage AI teams:

One person who owns the user + product loop

One person who can reason about models + prompts + APIs

One person who can ship and maintain infrastructure (even if part-time or outsourced)

Everything else - fancy titles, big teams, AGI moonshots - can wait.

Your real moat?

Speed of iteration … and speaking of speed: speed of iteration is everything. Yet I’ve seen it for 25 years: people consistently underestimate what speed really means. Especially at the earliest stage, you need to move like your life depends on it, because your startup’s life actually does.

If someone’s more focused on signaling work-life balance than delivering, they’re probably better off at Siemens or Accenture 🛻. And that’s fine, but this isn’t our kind of game 🏎️.

Access to niche workflows or proprietary data

The trust you build with 100 power users

And eventually, the architecture you control around the model, not the model itself

🚨 Don’t Get Distracted by the $100M Myth

The Meta/Altman/Wang war for top-tier AGI researchers is a different game. Interesting? Yes. Relevant to you? Not yet.

Think of Paris Saint-Germain.[23] For years they were a “team of stars” - Messi, Neymar, Mbappé - and still couldn’t win the Champions League. In 2025, they finally did. Why? Because they became a star team.

The same goes for startups. And about money: I know … money doesn’t make you happy, but it does make you rich. First things first. We learned early: if someone joins you for the money, they’ll leave you for the money.

Your “AI lab” is your laptop, your teammate, your customers, and the loop between them. That’s enough, if you stay focused.

That’s it for today. If you’re a founder working on real-world AI - not research theater - build the star team, skip the noise, and let’s talk.

At StudioAlpha, we don’t just invest and then help you raise; we help you win. If you’re working on something smart, specific, and uncomfortably ambitious, we’d genuinely love to hear from you.

Best,

Fab in Zurich

StudioAlpha Team | LinkedIn | X

Appendix: 🎯 Top AI Talent Movers in Meta’s Recruitment Blitz

Let’s see who has been poached by Meta. But first: what roles should you be looking for in general?

Key Roles in a High-Functioning AI Team

1. AI Research Scientist: Drives innovation through new models, algorithms, and experiments. → Curious, technically brilliant, and future-facing.

2. ML / AI Engineer: Turns research prototypes into production-ready systems (often includes MLOps). → Pragmatic builders who ensure things actually work.

3. Data Engineer: Builds and maintains clean, scalable data pipelines and infrastructure. → Detail-obsessed, systems thinkers who make everything flow.

4. Data Scientist: Analyzes data, runs experiments, and links findings to business outcomes. → Insightful storytellers with domain fluency.

5. AI / ML Architect: Designs the overall system to ensure scale, performance, and reliability. → Strategic integrators who connect the dots.

6. Solutions Engineer / Developer Advocate: Translates AI capabilities into customer value; supports product adoption. → Outgoing communicators who bridge tech and users.

7. Ethics & AI Governance Lead: Mitigates bias, ensures model safety, and handles regulatory concerns. → Empathetic and ethically grounded team conscience.

8. Chief AI Officer (CAIO) / AI Leadership: Sets AI strategy, aligns teams, and owns long-term direction. → Visionary execs who balance innovation with risk.

Top AI Talent Movers in Meta’s Recruitment Blitz

Alexandr Wang — Co-founder & CEO of Scale AI, headhunted to lead Meta’s superintelligence team as part of a $14.3B investment

Lucas Beyer — OpenAI Zurich; formerly at DeepMind; joined Meta

Alexander Kolesnikov — Also from OpenAI Zurich/DeepMind; part of Meta’s poach

Xiaohua Zhai — Third member of the Zurich trio to join Meta’s AI group

Shengjia Zhao — GPT‑4 expert from OpenAI; reportedly followed to Meta

Shuchao Bi — Multimodal model lead at OpenAI, now at Meta

Jiahui Yu — Former Google DeepMind & OpenAI researcher, moved to Meta

Hongyu Ren — Post-training lead for OpenAI’s mini models; joined Meta

Trapit Bansal — Key OpenAI AI reasoning researcher; recently joined Meta

Jack Rae — DeepMind researcher wooed by Meta

Johan Schalkwyk — Formerly at Sesame AI; also recruited

Daniel Gross — Co-founder of Safe Superintelligence; targeted by Meta after failed acquisition

Nat Friedman — Ex‑GitHub CEO, also recruited into Meta’s AI fold

Noam Brown — OpenAI lead poaching attempt (reportedly resisted)

Koray Kavukcuoglu — Google’s AI architect (poaching attempt)

I made this list with ChatGPT (GPT-4 Turbo) two days ago. Yesterday, The Information did its homework as well.

Who Are These People, Really?

What do these AI superstars have in common? (FT, WSJ)

Let’s map out the typical persona, the DNA of someone who makes it onto Meta’s list (or should make it onto yours):

PhD-level rigor – Often with deep math or physics backgrounds

Early bets – They were working on vision, speech, or transformers when nobody cared

Published & cited – Real breakthroughs in top conferences (check Google Scholar)

Interdisciplinary fluency – They can jump from algorithms to ethics to UX design critiques

Tribal networks – They know each other, read each other, and often move in groups (e.g., Beyer/Zhai/Kolesnikov; see the list above)

Product-agnostic, problem-obsessed – They don’t chase the hype cycle. They chase the edge.

But here’s the kicker: they’re not just in it for the money. According to Berkeley professor Alexei Efros, “My students don’t want to get rich—they want to solve interesting problems.”

What does that mean for you? Culture and mission still matter. A lot.

Meta’s “Big Bets” Strategy

Massive packages: Total compensation (mostly equity over several years) reportedly reaching up to $100 million for top-tier hires (WSJ, Business Insider).

OpenAI reaction: Sam Altman publicly claimed none of OpenAI’s top people had jumped ship—but researchers like Beyer, Zhao, and Ren disputed that narrative.

Combination tactics: Meta isn’t just chasing researchers—it’s also targeting founders and operators (Wang, Gross, Friedman), signaling a wide-spectrum approach to building its AGI lab (Inc).

Sources

https://www.wsj.com/tech/meta-ai-recruiting-mark-zuckerberg-openai-018ed7fc

https://www.theinformation.com/articles/zuckerbergs-new-ai-team-good

https://www.wsj.com/tech/meta-ai-recruiting-mark-zuckerberg-openai-018ed7fc

https://www.nytimes.com/2025/06/10/technology/meta-new-ai-lab-superintelligence.html

https://www.businessinsider.com/ex-openai-researcher-meta-not-give-100-million-signing-bonus-2025-6

https://www.reuters.com/business/meta-hires-three-openai-researchers-wsj-reports-2025-06-26/

https://arxiv.org/abs/2503.05628

https://keepthefuturehuman.ai/chapter-4-what-are-agi-and-superintelligence/

https://www.ibm.com/think/topics/artificial-general-intelligence

https://hai.stanford.edu/news/what-expect-ai-2024

https://nickbostrom.com/papers/survey.pdf

Interview Nick Bostrom

https://www.wsj.com/podcasts/tech-news-briefing/tnb-tech-minute-meta-announces-superintelligence-division/2973af80-40c5-4387-aa54-8e0a5a370ce1

https://openai.com/index/planning-for-agi-and-beyond/

https://www.anthropic.com/news/core-views-on-ai-safety

https://techcrunch.com/2024/10/03/even-the-godmother-of-ai-has-no-idea-what-agi-is/

Speech

elaborated with ChatGPT 4 Turbo

https://www.sequoiacap.com/article/generative-ai-a-creative-new-world/

https://a16z.com/ai/

https://www.ft.com/content/9e9fde8e-37bd-477d-8ebc-6d0a8b70f246

https://www.psg.fr/en
