Has MIT Opened Pandora’s Box on AI Being a Bubble?

MIT dissected 300 projects and found almost all dead. What does that tell us about our society, and what can founders learn?

Once upon a time - that is to say, on Wednesday, August 20, 2025 - U.S. tech stocks and AI-linked equities suddenly tumbled. No new policy announcements, no surprise earnings misses. The trigger? A fresh MIT study [1] that rattled confidence in AI’s future.

Bloomberg Tech [2] jumped on it, covering the fallout daily and even circling back a week later when Nvidia reported earnings on Wednesday, August 27. What looked like a routine dip turned into a recurring headline: something about AI isn’t adding up.

Initially, we thought: “Another blog on this? Everyone’s already talking about it.” But — probably unintentionally — this report reveals something deeper and more enduring. Something that comes up again and again in our conversations with startups. Not just about AI, but about the real secret of business success: innovation management.

TL;DR? Jump straight to Act 5.

Act 1 – The Crime Scene

It was a quiet summer in the markets when the shock hit. MIT released a report with a headline number so brutal it felt like a crime scene: 95% of all enterprise AI projects are failing. Tens of billions of dollars - gone. Pilots that never scaled. Promises that never made it to the P&L.

The report, titled “The GenAI Divide: State of AI in Business 2025” [3], gave the split a name: a handful of winners making millions, while everyone else is stuck in endless pilots.

The fallout was immediate. The Nasdaq stumbled [4]. Nvidia’s shiny quarterly earnings, usually the headline act, were suddenly overshadowed [5]. Palantir and other AI darlings slipped [6]. Bloomberg Tech made it the story of the week.

The mood turned fast: was AI just another bubble? Had the dream of the “next industrial revolution” already bled out on the floor?

The crime scene was set. All the evidence pointed to a failed promise, and the headlines rushed to frame the case. But as with every good mystery, first impressions are rarely the whole story.

The investigators at MIT didn’t just guess. They reviewed 300+ AI projects, interviewed leaders at 52 organizations, and surveyed 153 senior executives across industries. The Information added one criticism: the study identified neither the 52 organizations nor the individuals interviewed [7].

Even so, the verdict was brutal:

Despite $30–40 billion invested, 95% of generative AI pilots created zero measurable business value.

Only a small group - about 5% of cases - managed to turn AI into millions in savings or new revenue.

So what went wrong?

Adoption without transformation.

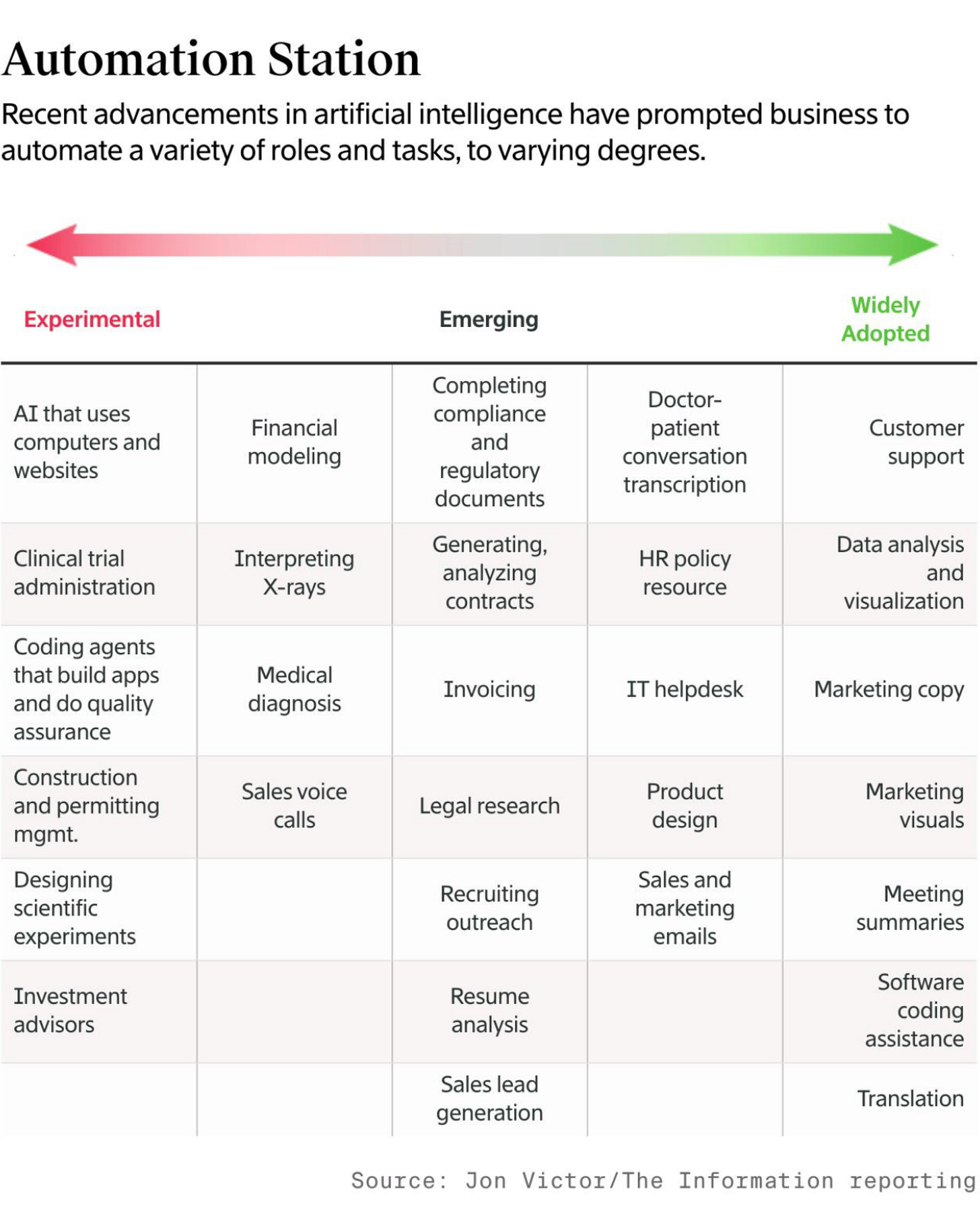

Tools like ChatGPT and Copilot spread fast - 80% of companies tested them, and nearly 40% deployed them. But they mostly boosted individual productivity, not company profits. Enterprise-grade AI systems fared worse: 60% evaluated them, only 5% made it to production.

Pilots that die young.

MIT calls it the “pilot-to-production chasm”: demos look great, but once integrated into real workflows, most systems collapsed. Brittle tools that don’t remember context or adapt quickly end up abandoned.

The shadow AI economy.

While official projects stall, employees quietly use personal ChatGPT or Claude accounts to get work done. Over 90% of employees surveyed admitted using consumer AI tools daily, even if their company had no official license.

The paradox of investment.

Companies spent heavily on 💅 flashy front-office pilots (70% of budgets went to sales & marketing AI), while back-office automation - where ROI is often higher - got little attention.

The report even mapped industries:

Winners so far (5%): Tech and Media showed real structural change.

Everyone else (95%): Finance, healthcare, energy, manufacturing — busy with pilots, but no deep transformation.

The crime scene looked clear: billions invested, near-zero returns, pilots stalling, employees bypassing corporate systems. The MIT team gave this failure a name: the GenAI Divide.

Act 2 – The Investigators

Every crime story needs detectives. In this case, the investigation was led not by consultants chasing headlines 👨🏼‍💼, but by one of MIT’s most respected innovators: Ramesh Raskar.

Raskar is a professor at the MIT Media Lab, head of the Camera Culture group, and one of the most prolific inventors in his field - with more than 100 patents and breakthroughs like femto-photography, a camera that can literally “see around corners.” He’s a winner of the Lemelson-MIT Prize, often called the “Oscar for inventors”.

Alongside him stood the MIT NANDA team - Project Networked Agents and Decentralized Architecture. Their mission isn’t just to analyze AI adoption, but to build the plumbing for the next generation of AI systems: agentic architectures, memory frameworks, and protocols like Model Context Protocol (MCP) and Agent-to-Agent (A2A) that allow AI agents to learn, remember, and coordinate. The other investigators are:

Aditya Challapally - lead author of the report, researcher at MIT NANDA, the one who pulled together the data from 300+ projects.

Pradyumna Chari - postdoc at the Media Lab, co-leading the enterprise adoption research.

Chris Pease - researcher and teacher inside NANDA, focusing on “agentic web” protocols.

These aren’t outsiders commenting from the sidelines. They are the architects of the very systems that could solve the failures they documented. Their credibility is what gave the report such weight: the people building the future are the same ones warning that most of today’s AI efforts are stuck in dead ends.

So when their report declared that 95% of GenAI projects fail not because of regulation or model quality, but because tools don’t learn, adapt, or fit into workflows, the markets listened.

The detectives had filed their report. The crime scene was documented. And the world had to reckon with the uncomfortable truth.

Act 3 – The Drama

No crime goes unnoticed, and this one set off a storm.

As soon as the MIT report hit, the media rushed in like crime reporters on deadline. Headlines painted the scene in stark colors:

FT ran with the market angle: U.S. tech stocks, which had been rallying since spring, suddenly slipped after the MIT findings, dragging down names like Nvidia and Palantir [8].

Investors.com (IBD) warned that AI darlings were sliding because the report showed 95% of GenAI pilots fail. Palantir down 3.6%, Nvidia off 3.5%. The headline: investors spooked [9].

Times of India echoed the bubble fears, comparing the hype-to-reality gap in AI to the dot-com crash [10].

But not everyone cried apocalypse.

Forbes framed it as a wake-up call: leaders need to focus on use-case fit, not flashy demos, if they want GenAI to cross into real business value [11].

Medium and Digital Commerce 360 amplified the message: adoption is high, but transformation is rare; back-office automation is where the hidden ROI lies [12][13].

And then came the skeptics:

The Tokenist accused the press of oversimplifying the study into a clickbait line: “95% fail.” They noted that MIT later restricted access to the report, worried about misinterpretation [14]. (As you might have realized, it is live again.)

Futuriom went further, calling the numbers sensationalized and the methodology opaque [15].

Still, the drama wouldn’t die down. For two full weeks, Bloomberg Tech circled back to the story in daily coverage, even after Nvidia’s earnings last week on August 27. The narrative stuck: if the smartest people at MIT say 95% of GenAI projects fail, maybe AI isn’t the sure bet Wall Street thought.

The tension was thick: Was this the start of an AI bubble bursting? Or just the messy middle of every innovation story?

Act 4 – The Bridge

At this point in every crime story, the question hangs in the air: Has Pandora’s Box been opened?

The evidence looked damning. Markets shaken. Headlines screaming “95% failure.” Executives whispering about an AI bubble. If you stopped here, you’d think the case was closed - the AI dream, dead on arrival.

But here’s the twist: MIT didn’t kill the dream. They just documented the mess. What looks like catastrophe is actually something more familiar - a pattern.

Every major technology leaves behind a trail of failed experiments before it transforms the world. The internet had its dot-com bust. Mobile apps had their graveyard of “next big things.” Even Jira, today an enterprise staple, started as a guerrilla tool teams bought from their coffee fund.

The MIT report isn’t Pandora’s Box. It’s the autopsy report on the first wave of experiments - the prototypes, the wrappers, the pilots that were never meant to last.

To really understand why this is not the end, we have to leave the crime scene and step into the forensic lab. That’s where the theories come in - the frameworks that show us how new technologies diffuse, stumble, and finally break through.

Act 5 – The Theories (The Forensics)

Every detective story has that moment when the lab results land on the desk. The fingerprints, the blood tests, the hard science. Here, our “forensics” aren’t DNA samples but the classic theories of how new technologies spread - and why they stumble before they scale.

Exhibit A: Diffusion of Innovation (DOI)

Neutral explanation:

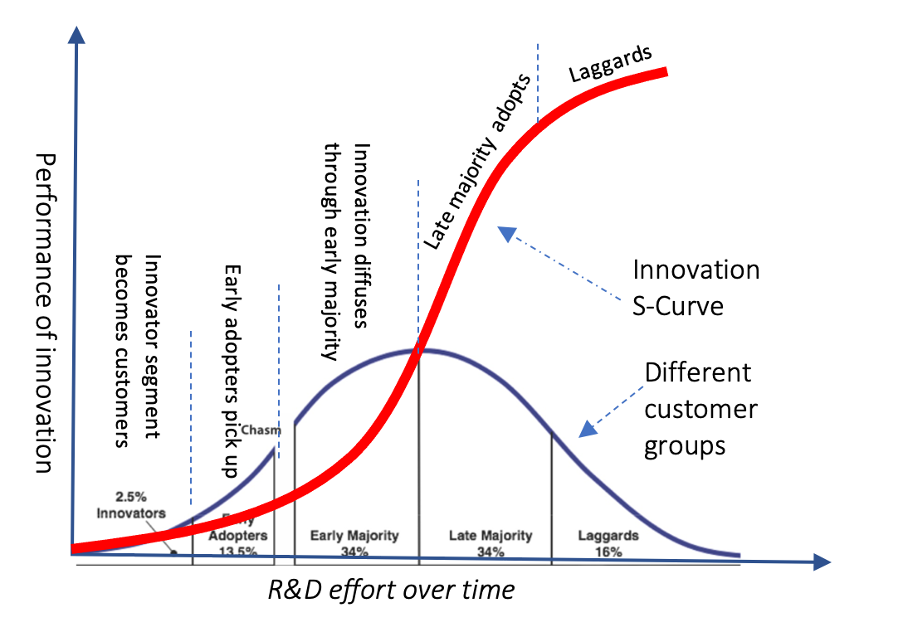

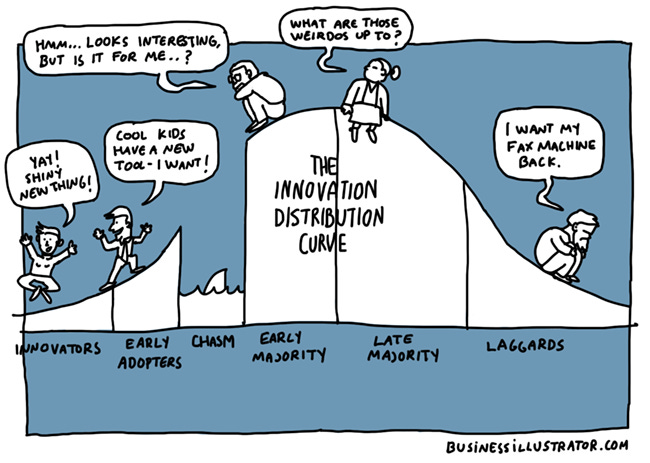

Everett Rogers mapped how innovations spread through society in stages: innovators, early adopters, early majority, late majority, and laggards. Adoption follows an S-curve: slow at first, then acceleration, then saturation [16].
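To make the S-curve concrete, here is a minimal Python sketch. It assumes a logistic function as the curve shape (a common simplification, not something the MIT report prescribes) and uses Rogers’ standard category shares of 2.5%, 13.5%, 34%, 34%, and 16% as cumulative thresholds. The function names and the `midpoint` and `steepness` parameters are purely illustrative.

```python
import math

# Cumulative upper bounds for Rogers' adopter categories
# (2.5% + 13.5% + 34% + 34% + 16% = 100%).
SEGMENTS = [
    (0.025, "innovators"),
    (0.16, "early adopters"),
    (0.50, "early majority"),
    (0.84, "late majority"),
    (1.00, "laggards"),
]


def adoption_share(t, midpoint=0.0, steepness=1.0):
    """Cumulative share of adopters at time t, assuming a logistic S-curve."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def adopter_segment(share):
    """Name the adopter category the market is currently working through."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    for upper_bound, name in SEGMENTS:
        if share <= upper_bound:
            return name
    return "laggards"


if __name__ == "__main__":
    # Time is in arbitrary units around the midpoint of the curve.
    for t in (-4, -2, 0, 2, 4):
        share = adoption_share(t)
        print(f"t={t:+d}  adoption={share:.1%}  segment={adopter_segment(share)}")
```

Far to the left of the midpoint, the curve barely moves, which is the phase where pilots pile up and visible results stay thin. The same effort a little later lands on the steep part of the curve.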

Applied to MIT findings:

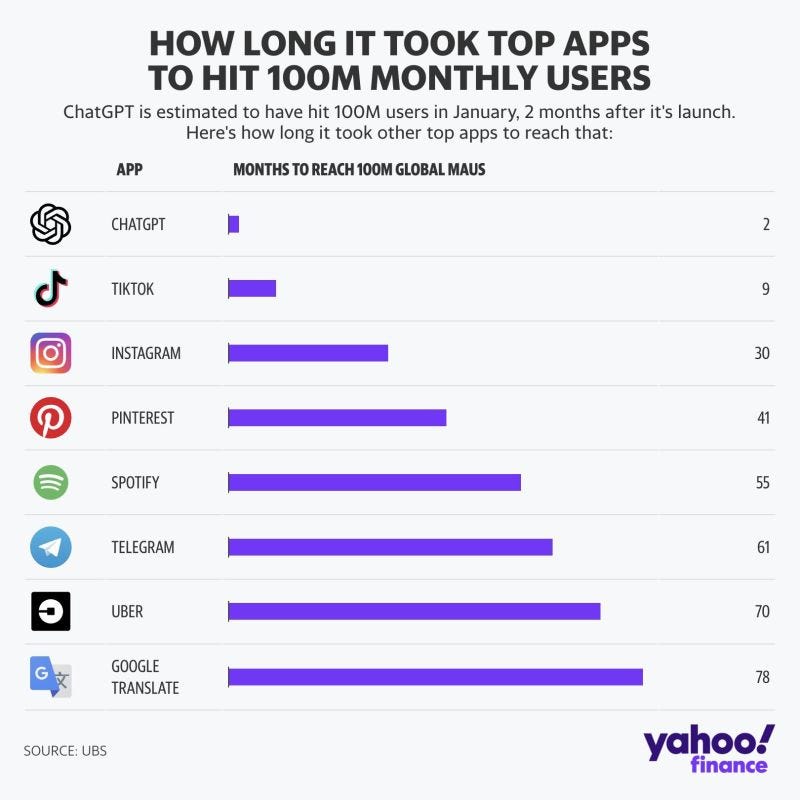

Most enterprises are still stuck at the left side of that curve. They’ve heard of GenAI, they’ve run pilots - but they haven’t crossed into scaled adoption yet. That explains why the MIT team found 95% of projects fail. It’s not unusual - it’s exactly what happens early in every diffusion cycle.

Lesson for founders:

Don’t measure your progress against enterprise adoption. Big-company managers only move once a technology is safely on Gartner’s “plateau of productivity” (Gartner Hype Cycle) [17]. Founders need to think in years, not quarters. Enterprise managers rarely do - their bonuses and career risk are tied to short-term results. That’s why they wait until the tech is de-risked and politically safe before scaling.

That’s why you should start by selling into SMBs/SMEs (small and medium-sized enterprises). And if you target enterprises, do it guerrilla-style. Jira is the classic case: it spread from team coffee funds while CIOs had no clue, years before enterprises standardized on it (Atlassian history) [18].

Exhibit B: Technology Acceptance Model (TAM)

Neutral explanation:

Fred Davis showed that people adopt a technology if they perceive it as useful and easy to use. These two dimensions - perceived usefulness (PU) and perceived ease of use (PEOU) - are still the strongest predictors of user acceptance [19].

Applied to MIT findings:

ChatGPT ticks both boxes. It feels useful and easy - which is why employees use it daily, often without approval. Enterprise AI pilots fail the test: clunky UI, brittle integrations, and high training needs. The MIT study confirmed this gap by showing a shadow AI economy where over 90% of employees rely on consumer tools while official projects stall.

Lesson for founders:

Forget feature bloat and over-engineered demos. If your product isn’t obviously useful and dead simple, adoption won’t happen. Build for workflow fit and ease, not just technical sophistication. The TAM lens is clear: if it passes “useful and easy,” people will adopt - even behind IT’s back.

Exhibit C: Crossing the Chasm

Neutral explanation:

Geoffrey Moore added a twist to Rogers’ adoption curve: between the early adopters (visionaries) and the early majority (pragmatists) lies a dangerous gap - the chasm. Visionaries love shiny new tools. Pragmatists demand proof, references, and reliable ROI before they move [20]. Many technologies die here.

Applied to MIT findings:

That’s exactly where GenAI is stuck. Visionaries inside enterprises have launched pilots, but pragmatists don’t yet see trustworthy ROI or integration into core workflows. The MIT study showed the result: lots of pilots, almost no production. The “95% failure rate” is a textbook chasm problem.

Lesson for founders:

Your challenge isn’t winning visionaries - they’re already excited. It’s convincing pragmatists. That means reference customers, clear ROI, and seamless workflow integration. Build the bridge across the chasm with credibility, not hype. The startups that cross it will leave the 95% of dead pilots behind and become the category leaders.

Exhibit D: Teece’s Dynamic Capabilities

Neutral explanation:

Our friend (yes) David Teece argued that big companies don’t fail because they lack money or talent. They fail because they lack dynamic capabilities - the ability to sense opportunities, seize them, and reconfigure resources quickly in a changing environment [21].

Applied to MIT findings:

The MIT study showed this clearly: startups moved from pilot to rollout in about 90 days, while enterprises needed nine months or more. By the time corporate pilots were ready, the energy was gone and the project shelved. That’s not a GenAI problem, it’s a capabilities problem.

Lesson for founders:

Your edge against giants is speed. Move faster than they can reorganize. Don’t try to “out-resource” an enterprise - outrun them. Ship quick pilots, close reference customers, and iterate. Dynamic capabilities aren’t optional for startups; they’re your superpower.

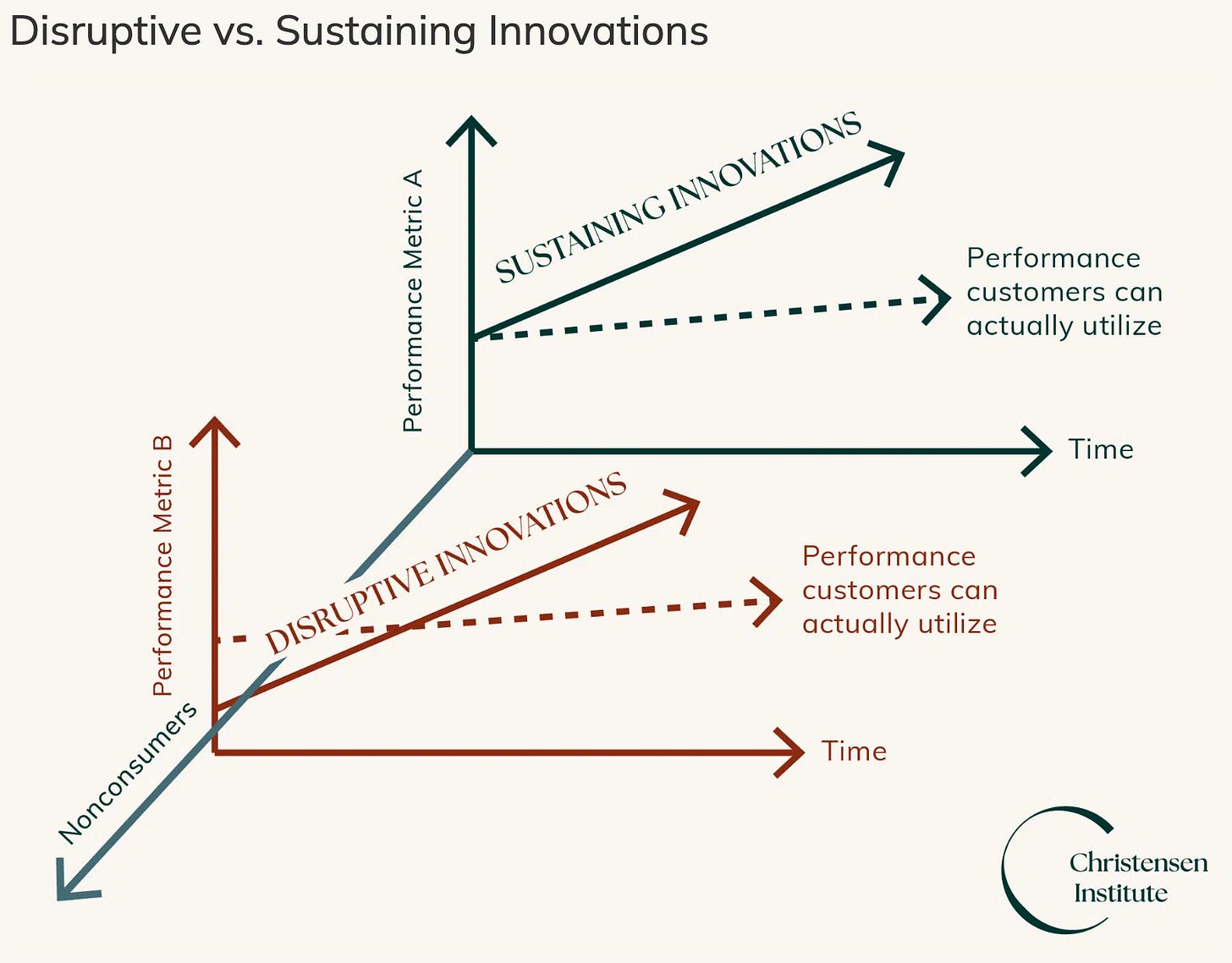

Exhibit E: Disruption Theory (Christensen)

Neutral explanation:

Clayton Christensen showed that true disruption doesn’t start at the top of the market. It begins with tools that are cheaper, simpler, and more accessible. They creep in at the low end, serving customers incumbents ignore. Over time, they improve, move up-market, and eventually topple the giants [22].

Applied to MIT findings:

Enterprise GenAI projects don’t fit this pattern yet. They’re expensive, complex, and fragile - the opposite of accessible. That’s why 95% of them fail. But Christensen would say: that’s not the end of the story. As costs drop and usability rises, the true disruption will hit - and it won’t come from the million-dollar enterprise pilots. It will come from leaner, more accessible products.

Lesson for founders:

Don’t chase big-ticket enterprise deals first. Build tools that are affordable and accessible, even to small teams. Start at the edges, where incumbents can’t be bothered. That’s where disruption takes root. And when the technology matures, you’ll be the one moving up-market while the slow giants are still stuck in their pilot graveyards.

Act 6 – The Reveal (Why This Is Good News)

Every crime story needs a twist. Here it comes: the MIT report isn’t a death sentence for AI. It’s just Act One. Yes, 95% of projects failed - but that’s normal. It’s the trough of disillusionment, the graveyard phase that clears the way for the winners.

For founders, the real opportunity isn’t whether AI will create value. It’s where you place your bet in the business model. Look at the four levers:

1. Value Proposition – What you offer

AI isn’t about adding “AI” to your pitch deck. It’s about solving a real problem better, faster, or cheaper than anyone else. If your AI doesn’t shift the value proposition - clearer insights, faster execution, lower costs - it won’t survive the trough.

Founder focus: Craft a proposition where AI is the engine, not the gimmick. Make the value so obvious customers can’t dismiss it.

2. Value Delivery – Who you serve and how

The MIT report showed enterprises stall because they can’t deliver AI at scale. That’s an opening for startups. Small teams can target specific customer segments with tailored solutions - SMBs, niche industries, underserved users. Delivery is where agility beats bureaucracy.

Founder focus: Pick a target customer you can delight now. Own one vertical before chasing the whole enterprise market.

3. Value Creation – How you build

This is where the leadership problem shows up. AI can automate tasks, but if leaders don’t adapt processes or cut redundant headcount, the savings vanish. That’s not failed AI - that’s failed leadership.

The real danger isn’t that AI fails. It’s that leaders won’t act on what AI enables. If AI takes over a process, you need the courage to restructure - whether that means reducing headcount, killing vendor contracts, or changing workflows. Without those hard calls, the P&L impact never shows up. Then the next MIT study will chalk it up as “another failed AI pilot,” when in truth it was failed leadership.

Founder focus: Build tools that make change unavoidable. If your product removes 80% of a process, design it so leaders can’t dodge the decision. Force the savings to show up.

4. Value Capture – How you make money

The MIT graveyard is full of pilots with no revenue model. AI isn’t magic; it has to capture value through business logic. The winners are already proving it: OpenAI, Anthropic, and others are generating billions in ARR. The lesson: disruption only matters if you capture part of the value you create.

Founder focus: Align your pricing with customer ROI. If your product saves $1M, charging $100k feels like a bargain. Business models that scale with value delivered will outlast hype.
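The arithmetic behind that example, as a small Python sketch. The 10% capture rate is simply what the $100k-on-$1M example implies; the function names are illustrative and this is not a standard pricing formula.

```python
def value_based_price(annual_savings, capture_rate=0.10):
    """Price set as a fixed share of the value the customer realizes."""
    if not 0 < capture_rate <= 1:
        raise ValueError("capture_rate must be in (0, 1]")
    return annual_savings * capture_rate


def customer_roi(annual_savings, price):
    """Net return per dollar the customer spends: (savings - price) / price."""
    if price <= 0:
        raise ValueError("price must be positive")
    return (annual_savings - price) / price


if __name__ == "__main__":
    savings = 1_000_000  # the $1M saving from the example above
    price = value_based_price(savings)
    roi = customer_roi(savings, price)
    print(f"price: ${price:,.0f}, customer ROI: {roi:.1f}x")
```

At a 10% capture rate the customer keeps roughly nine dollars of net savings for every dollar paid, which is why the price feels like a bargain. Because the price is a share of savings, revenue grows only when the customer’s results do.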

The final twist

The MIT report shows the bodies - but the crime wasn’t AI. The crime was inertia and weak leadership. For founders, that’s good news: the field is wide open. Build systems that reshape these four levers, and you’re not just avoiding the 95% graveyard - you’re building the next category leader.

And history reminds us:

Facebook wasn’t the first social network.

Google wasn’t the first search engine.

Apple didn’t invent the phone.

But they nailed their business models early.

GenAI will rhyme with that story. The tools are here. The levers are clear. The rest is execution.

🎧 Producer’s Note

When Rapper’s Delight dropped in 1979, people said: “That’s not music. Just a fad.”

Today? Rap is the highest-revenue genre in pop music … and still growing.

Same with AI: right now, most say “95% fail. It’s hype.”

Fast-forward - it will own the charts.

Done.

Best,

Fab 🕺🏽 & Prof. Andy 🎺

Sources

https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf

https://www.youtube.com/@BloombergTechnology/videos

https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf

https://www.ft.com/content/2c02b81b-076f-41fc-9971-5565a2648859?utm_source=chatgpt.com

https://fortune.com/2025/08/20/us-tech-stocks-slide-altman-bubble-ai-mit-study/?utm_source=chatgpt.com

https://www.investors.com/news/technology/artificial-intelligence-stocks-ai-stocks-mit-study/?utm_source=chatgpt.com

https://www.theinformation.com/articles/ai-native-apps-18-5-billion-annualized-revenues-rebut-mits-skeptical-study?rc=dlouky

https://www.ft.com/content/2c02b81b-076f-41fc-9971-5565a2648859?utm_source=chatgpt.com

https://www.investors.com/news/technology/artificial-intelligence-stocks-ai-stocks-mit-study/?utm_source=chatgpt.com

https://timesofindia.indiatimes.com/technology/tech-news/mit-study-finds-95-of-generative-ai-projects-are-failing-only-hype-little-transformation/articleshow/123453071.cms?utm_source=chatgpt.com

https://www.forbes.com/sites/andreahill/2025/08/21/why-95-of-ai-pilots-fail-and-what-business-leaders-should-do-instead/?utm_source=chatgpt.com

Correct — it almost looks like the journalists didn’t even bother with ChatGPT. They just copy-pasted straight from the MIT report or each other’s headlines.

https://www.digitalcommerce360.com/2025/08/25/mit-report-no-return-on-generative-ai/?utm_source=chatgpt.com

https://tokenist.com/the-real-ai-divide-how-media-misread-mit-and-moved-billions/?utm_source=chatgpt.com

https://www.futuriom.com/articles/news/why-we-dont-believe-mit-nandas-werid-ai-study/2025/08?utm_source=chatgpt.com

https://www.open.edu/openlearn/nature-environment/organisations-environmental-management-and-innovation/content-section-1.7?utm_source=chatgpt.com

https://www.gartner.com/en/research/methodologies/gartner-hype-cycle?utm_source=chatgpt.com

https://www.ebsco.com/research-starters/technology/technology-acceptance-model-tam?utm_source=chatgpt.com

https://en.wikipedia.org/wiki/Crossing_the_Chasm?utm_source=chatgpt.com

https://en.wikipedia.org/wiki/Dynamic_capabilities?utm_source=chatgpt.com

https://www.christenseninstitute.org/theory/disruptive-innovation/?utm_source=chatgpt.com